Visual Music

We analyse an external audio source in real time and use its frequency content to drive visualisations built from reactive video textures and 3D content.

These visuals are manipulated in virtual environments according to the mathematical principles behind cymatics. Our techniques are often used in collaborative projects.

Live audio-analysis

Our pipeline needs audio to drive it, so we usually work with live music. VDMX analyses the incoming audio frequencies, which we capture with our low-latency, Thunderbolt-powered soundcard or receive over an NDI (network) connection.

Reactive content

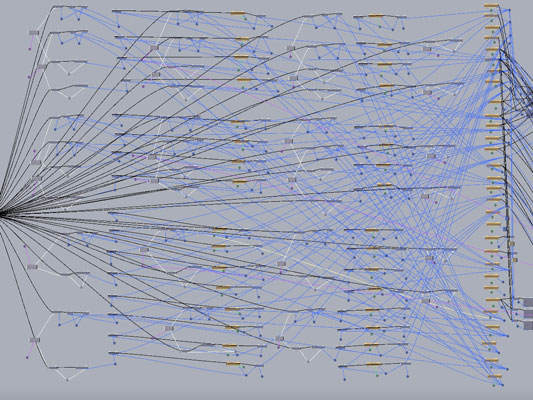

The audible spectrum is divided into 32 individual bands, which we use to manipulate multiple layers of video textures. The audio-analysis data is also assigned (with uScript) to a range of shader parameters and animation values in the 3D scenes we have built in Unity.
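As a rough illustration of the band-splitting idea, the sketch below reduces one frame of audio samples to 32 normalised levels. It is not how VDMX performs its analysis: the logarithmic band spacing, the 20 Hz to 20 kHz range, the normalisation against the loudest band, and the function names `fft`, `band_edges` and `band_levels` are all assumptions made for the example.

```python
import cmath
import math


def fft(samples):
    """Recursive radix-2 FFT; len(samples) must be a power of two."""
    n = len(samples)
    if n == 1:
        return [complex(samples[0])]
    even = fft(samples[0::2])
    odd = fft(samples[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def band_edges(num_bands=32, low_hz=20.0, high_hz=20000.0):
    """Logarithmically spaced band edges (num_bands + 1 values). Assumed spacing."""
    ratio = high_hz / low_hz
    return [low_hz * ratio ** (i / num_bands) for i in range(num_bands + 1)]


def band_levels(samples, sample_rate=44100, num_bands=32):
    """Return one level per band, scaled to 0..1 against the loudest band."""
    n = len(samples)
    spectrum = fft(samples)
    edges = band_edges(num_bands)
    span = math.log(edges[-1] / edges[0])
    levels = [0.0] * num_bands
    # Only the bins below the Nyquist frequency carry unique information.
    for k in range(1, n // 2):
        freq = k * sample_rate / n
        if freq < edges[0] or freq >= edges[-1]:
            continue
        band = min(int(num_bands * math.log(freq / edges[0]) / span), num_bands - 1)
        levels[band] += abs(spectrum[k])
    peak = max(levels)
    return [lvl / peak if peak > 0 else 0.0 for lvl in levels]
```

Each level could then be scaled into whatever range a texture layer or shader parameter expects, for example `1.0 + 0.5 * levels[0]` for a bass-driven scale value (a hypothetical mapping, not one taken from our scenes).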

Real-time rendering

We use VDMX to process our video textures, which are then shared with Unity via Syphon. This includes generating tessellation and normal maps at runtime for use with shaders in our 3D scenes.
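For readers unfamiliar with normal maps, here is a minimal CPU sketch of one common way to derive them from a greyscale height image using finite differences. In our pipeline this work happens on the GPU; the function name `normal_map`, the `strength` parameter and the sign convention for the green channel are assumptions made for illustration only.

```python
import math


def normal_map(height, strength=1.0):
    """Convert a 2D list of heights (0..1) into per-pixel RGB normals (0..255)."""
    rows, cols = len(height), len(height[0])
    out = []
    for y in range(rows):
        row = []
        for x in range(cols):
            # Central differences, clamped to the image at the borders.
            left = height[y][max(x - 1, 0)]
            right = height[y][min(x + 1, cols - 1)]
            up = height[max(y - 1, 0)][x]
            down = height[min(y + 1, rows - 1)][x]
            dx = (right - left) * strength
            dy = (down - up) * strength
            # The surface normal tilts away from the direction of increasing height.
            length = math.sqrt(dx * dx + dy * dy + 1.0)
            normal = (-dx / length, -dy / length, 1.0 / length)
            # Remap each component from -1..1 to 0..255 for storage as a colour.
            row.append(tuple(round((c * 0.5 + 0.5) * 255) for c in normal))
        out.append(row)
    return out
```

A flat height image yields the familiar uniform blue of an unperturbed normal map, while a brighter region in the source texture produces normals that lean away from it.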

High-definition video

Our GPU supports up to six video outputs, which we use to projection-map high-resolution content. Our Blackmagic PCIe cards provide broadcast-quality video input and output. We also now have a dedicated server for improved live-streaming.

Dynamic live shows

The final video output of our process can be viewed on a wide range of platforms. Our audio-analysis techniques have also been implemented in collaborative projects with other visual artists.

View our gallery